If you have multiple domains or subdomains pointing to a single directory and need to serve a different robots.txt file depending on the requested domain, consider creating an HTTP handler that generates the content of robots.txt accordingly.

IIS configuration

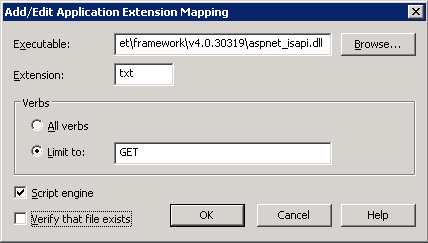

First, IIS needs to pass all requests for .txt files to the ASP.NET engine. To do that in IIS 6.0, right-click the website and select Properties. On the Home Directory tab, click the Configuration button and find .aspx in the Application extensions list. Select the .aspx extension, click Edit and copy the Executable path. Go back to the list and click Add. Paste the executable path you copied from the .aspx extension, enter txt as the Extension, limit Verbs to GET only and untick the "Verify that file exists" checkbox.

web.config

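You'll also need to add three entries to your web.config file. The first two go in configuration -> system.web -> httpHandlers:

```xml
<add verb="GET" path="/robots.txt" type="System.Web.UI.SimpleHandlerFactory" />
<add path="*.txt" verb="GET" type="System.Web.StaticFileHandler" />
```

The third goes in configuration -> system.web -> compilation -> buildProviders:

```xml
<add extension=".txt" type="System.Web.Compilation.WebHandlerBuildProvider" />
```

The first entry hands requests for /robots.txt to SimpleHandlerFactory, the same factory ASP.NET uses for .ashx handlers; the second keeps every other .txt file served as a plain static file; and the build provider lets ASP.NET compile robots.txt the same way it compiles an .ashx file.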
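Put together, the three entries sit in web.config roughly as in the following minimal sketch. It assumes the classic system.web handler configuration used with IIS 6.0; anything else your site's web.config already contains (other handlers, or attributes on the compilation element such as debug or targetFramework) is omitted here and should be kept.

```xml
<?xml version="1.0"?>
<configuration>
  <system.web>
    <httpHandlers>
      <!-- Requests for /robots.txt are compiled and run as a web handler, like an .ashx file -->
      <add verb="GET" path="/robots.txt" type="System.Web.UI.SimpleHandlerFactory" />
      <!-- All other .txt files are served from disk unchanged -->
      <add path="*.txt" verb="GET" type="System.Web.StaticFileHandler" />
    </httpHandlers>
    <compilation>
      <buildProviders>
        <!-- Lets ASP.NET compile robots.txt the way it compiles .ashx files -->
        <add extension=".txt" type="System.Web.Compilation.WebHandlerBuildProvider" />
      </buildProviders>
    </compilation>
  </system.web>
</configuration>
```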

robots.txt handler

Now create a new file named robots.txt in the site root and put the handler code in it.

I tend to use Umbraco to pull the content of robots.txt from the database (so the per-domain robots.txt content is manageable through the CMS); however, you may want to hardcode it, as in the example below, or store it in your own database.

```csharp
<%@ webhandler Language="C#" Class="Robots" %>

using System;
using System.Web;
using System.Configuration;

public class Robots : IHttpHandler
{
    // Returning false tells ASP.NET not to reuse this handler instance across requests.
    public bool IsReusable
    {
        get { return false; }
    }

    public void ProcessRequest(HttpContext context)
    {
        // Declared outside the try block so they are still in scope when the response is written.
        HttpResponse response = context.Response;
        HttpRequest request = context.Request;
        var robotsContent = string.Empty;

        // Clear the buffer first, then set the content type, so the header is not discarded.
        response.Clear();
        response.BufferOutput = true;
        response.ContentType = "text/plain";

        try
        {
            if (request.Url.Host == null) return;

            // Pick the robots.txt content based on the host name the request came in on.
            switch (request.Url.Host)
            {
                case "xxx.com":
                    robotsContent = "XXX";
                    break;
                case "yyy.xxx.com":
                    robotsContent = "YYY";
                    break;
                default:
                    robotsContent = "XXX";
                    break;
            }
        }
        catch
        {
            robotsContent = "An error occurred.";
        }

        response.Write(robotsContent);
    }
}
```

Tagged: .NET 4, C#, configuration, IIS, IIS 7, internet information services, ISAPI, robots.txt, search engine optimisation